R-rated content detection

HackerEarth has introduced R-rated content detection: if a programming question or MCQ contains offensive or non-inclusive content, the platform highlights it so that you can remove or change it. The feature helps keep problem statements appropriate for candidates, so that they do not encounter biased or offensive wording. The platform checks the whole question and detects sentences that signify violence, hate, bias, or other negative emotions.

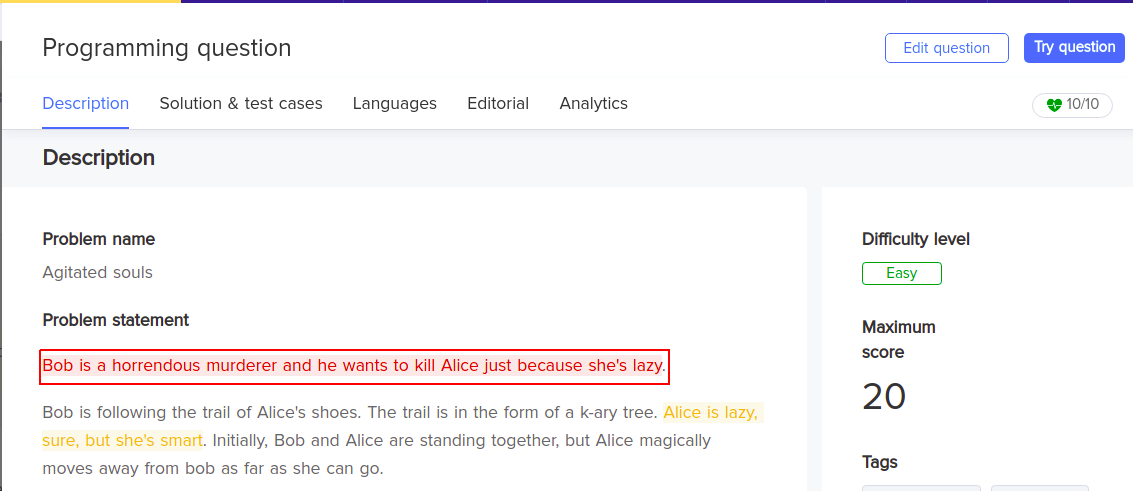

Open any question in My library on the platform, and the flagged words or sentences are highlighted in red or yellow. You can then reword the sentence so that the question’s meaning remains intact after the flagged words or sentences are removed. For example, in the sentence ‘A is the enemy you want to murder’, the words enemy and murder are flagged and highlighted so that you can alter the sentence if you want to. Other words that can be categorized as offensive include killer, lazy, and fat.
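To make the example above concrete, here is a minimal sketch of word-level flagging. It is an illustration only and not HackerEarth's implementation: the `FLAGGED_WORDS` table, the category assigned to each word, and the `flag_words` function are all hypothetical, and the real feature may use an entirely different approach.

```python
import re

# Hypothetical word list for illustration only. The words come from the
# examples in this article; the categories assigned to them are assumptions.
FLAGGED_WORDS = {
    "enemy": "violence",
    "murder": "violence",
    "killer": "violence",
    "lazy": "bias",
    "fat": "bias",
}


def flag_words(sentence):
    """Return a (word, category, start_offset) tuple for each flagged word."""
    hits = []
    # Scan whole words so that "fat" does not match inside "father".
    for match in re.finditer(r"[A-Za-z']+", sentence):
        word = match.group().lower()
        if word in FLAGGED_WORDS:
            hits.append((word, FLAGGED_WORDS[word], match.start()))
    return hits


for word, category, offset in flag_words("A is the enemy you want to murder."):
    print(f"{word!r} flagged as {category} at offset {offset}")
```

A plain word list like this ignores context, so it would also flag harmless uses of a word, which is one reason the final decision to edit a question stays with you.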

How does R-rated content detection work in the recruiter?

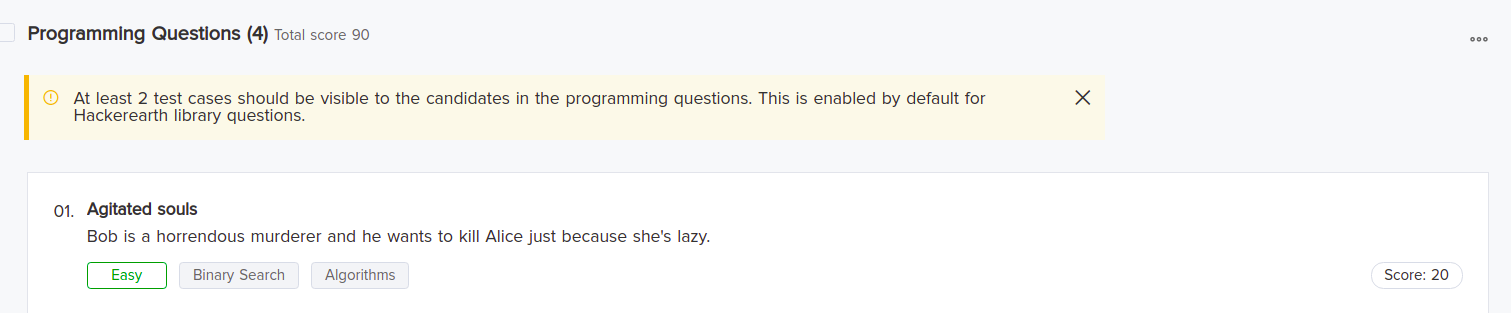

R-rated content detection works only on programming and MCQ questions. To check how the feature works, do the following:

-

Select programming or MCQ questions from my library.

-

Click on the question you want to check the content for. Here we will select ‘Agitated souls’

-

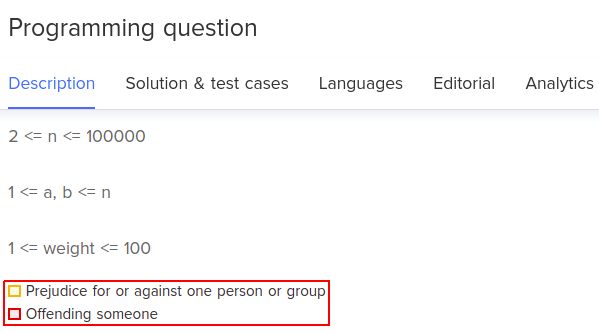

You can see that the flagged words or sentences are highlighted in various colors.

-

The colors signify the various criteria under which flagged words or sentences are classified into. They basically signify the various reasons due to which the words or sentences are flagged. They will be shown to you at the end of the question.

You can now edit the question and change the flagged words or the sentences.